The Ethics of Artificial Intelligence: Examining the Moral Implications of AI Development and Implementation

Table of Contents

Chapter ONE

1.1 Introduction

1.2 Background of the Study

1.3 Problem Statement

1.4 Objectives of the Study

1.5 Limitations of the Study

1.6 Scope of the Study

1.7 Significance of the Study

1.8 Structure of the Research

1.9 Definition of Terms

Chapter TWO

2.1 Overview of Artificial Intelligence

2.2 Ethical Theories and Frameworks

2.3 Ethical Issues in AI Development

2.4 Case Studies on AI Ethics

2.5 Regulations and Policies in AI

2.6 Ethical Decision-Making in AI

2.7 AI Bias and Fairness

2.8 Privacy and Data Protection in AI

2.9 Transparency and Accountability in AI

2.10 AI and the Future of Humanity

Chapter THREE

3.1 Research Design

3.2 Research Approach

3.3 Data Collection Methods

3.4 Sampling Techniques

3.5 Data Analysis Procedures

3.6 Ethical Considerations

3.7 Research Limitations

3.8 Validity and Reliability of Research Findings

Chapter FOUR

4.1 Overview of Research Findings

4.2 Ethical Implications of AI Development

4.3 Stakeholder Perspectives on AI Ethics

4.4 Addressing Bias and Discrimination in AI

4.5 Ensuring Transparency and Accountability

4.6 Recommendations for Ethical AI Development

4.7 Future Directions in AI Ethics Research

4.8 Comparative Analysis of AI Ethics Frameworks

Chapter FIVE

5.1 Summary of Findings

5.2 Conclusions

5.3 Contributions to Knowledge

5.4 Implications for Practice

5.5 Recommendations for Future Research

5.6 Conclusion and Final Remarks

Project Abstract

Artificial Intelligence (AI) has become increasingly pervasive in modern society, with applications ranging from autonomous vehicles to virtual assistants. While advances in AI offer numerous benefits, they also raise profound ethical questions about the moral implications of its development and implementation. This research examines the ethical considerations surrounding AI, aiming to provide a comprehensive analysis of the challenges and opportunities associated with its deployment. The study begins by introducing the concept of AI and its historical background, tracing the evolution of AI technologies and their integration into various sectors. It then sets out the problem statement, emphasizing the ethical dilemmas that arise from the use of AI systems, such as issues of privacy, bias, accountability, and job displacement. The objectives of the research are outlined, focusing on the exploration of ethical frameworks and guidelines to govern AI development. The limitations and scope of the study are identified to delineate the boundaries within which the research operates. The significance of the study is underscored, emphasizing the importance of addressing ethical concerns in AI to ensure its responsible and beneficial use. The structure of the research is then outlined, providing a roadmap for the subsequent chapters, including the literature review, research methodology, discussion of findings, and conclusion.

The literature review critically examines existing scholarship on AI ethics, encompassing perspectives from philosophy, computer science, and the social sciences. Key themes include ethical decision-making in AI systems, the impact of AI on human values, and the role of regulation in mitigating ethical risks. The review synthesizes theoretical frameworks and empirical studies to offer a comprehensive understanding of the ethical landscape of AI development.
The research methodology section outlines the approach adopted for the study, including data collection methods, analysis techniques, and ethical considerations. The research design combines qualitative and quantitative data sources to triangulate findings and enhance the validity of the research outcomes. Ethical considerations are paramount in research on AI ethics itself, and the study emphasizes transparency, accountability, and stakeholder engagement in its own conduct. The chapter on the discussion of findings presents the results of the study, highlighting key ethical challenges and opportunities in AI development and implementation. The findings are contextualized within the broader ethical discourse, drawing on theoretical insights and empirical evidence to inform policy recommendations and best practices. Their implications are discussed in relation to existing ethical frameworks and the need for ongoing dialogue and collaboration among stakeholders.

In conclusion, this research underscores the critical importance of ethical considerations in AI development and implementation. By examining the moral implications of AI technologies, the study contributes to a deeper understanding of the ethical challenges posed by AI and offers insights into how these challenges can be addressed through ethical guidelines, regulatory frameworks, and stakeholder engagement. The research calls for a proactive, interdisciplinary approach to AI ethics, emphasizing the shared responsibility of researchers, developers, policymakers, and the public in shaping the ethical trajectory of AI innovation.

Project Overview

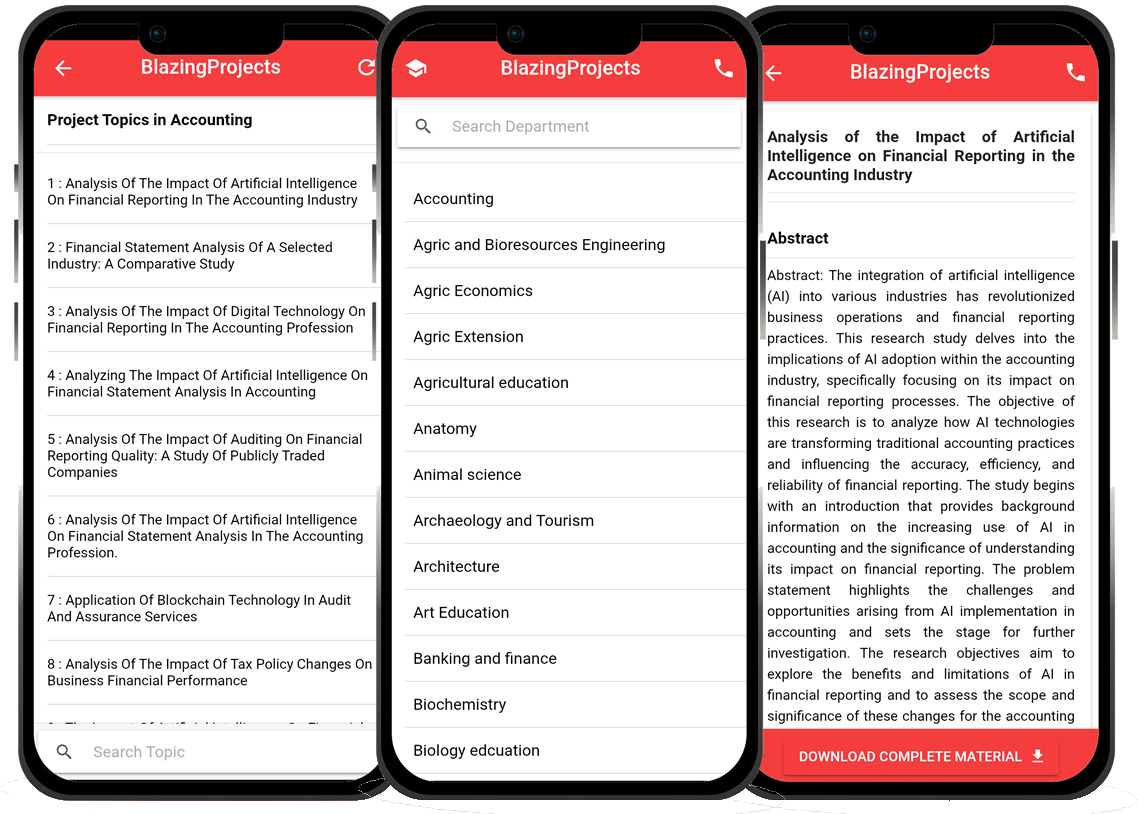

The research project titled "The Ethics of Artificial Intelligence: Examining the Moral Implications of AI Development and Implementation" examines the ethical considerations surrounding the rapidly evolving field of artificial intelligence (AI). As AI technologies continue to advance and become integrated into more aspects of society, it is crucial to analyze critically the moral implications of their development and deployment. The project aims to explore the ethical challenges posed by AI technologies, particularly in decision-making processes, privacy, bias and discrimination, accountability, and the potential impact on societal values and norms. By examining these moral implications, the research seeks to clarify how AI systems can be developed and implemented in ways that uphold ethical principles and respect human values.

Through a comprehensive review of existing literature on AI ethics, the project will analyze the current state of ethical frameworks and guidelines for AI development. By synthesizing perspectives from philosophy, technology, and the social sciences, the research will identify key ethical issues that arise in the context of AI, such as transparency, fairness, autonomy, and the ethical responsibility of AI creators and users.

Furthermore, the research will investigate the practical implications of ethical considerations in AI implementation, exploring how ethical principles can be translated into real-world applications and policies. By examining case studies and examples of AI systems in various domains, the project will highlight the ethical dilemmas faced by developers, policymakers, and users when integrating AI technologies into different societal contexts. Ultimately, the research aims to provide insights and recommendations for promoting ethical AI development and implementation practices.
By fostering a deeper understanding of the moral implications of AI technologies, the project seeks to contribute to the responsible and sustainable advancement of AI systems that align with ethical values and promote the well-being of individuals and society as a whole.