LaSalle invariance principle for ordinary differential equations and applications

Table of Contents

<p> Acknowledgment<br>Certification<br>Approval<br>Introduction<br>Dedication<br>1 Preliminaries<br>1.1 Definitions and basic theorems<br>1.2 Exponential of matrices<br>2 Basic Theory of Ordinary Differential Equations<br>2.1 Definitions and basic properties<br>2.2 Continuous dependence with respect to the initial conditions<br>2.3 Local existence and blowing up phenomena for ODEs<br>2.4 Variation of constants formula<br>3 Stability via linearization principle<br>3.1 Definitions and basic results<br>4 Lyapunov functions and LaSalle’s invariance principle<br>4.1 Definitions and basic results<br>4.2 Instability Theorem<br>4.3 How to search for a Lyapunov function (variable gradient method)<br>4.4 LaSalle’s invariance principle<br>4.5 Barbashin and Krasovskii Corollaries<br>4.6 Linear systems and linearization<br>5 More applications<br>5.1 Control design based on Lyapunov’s direct method<br>Conclusion<br>Bibliography <br></p>

Project Abstract

Abstract

The LaSalle invariance principle is a powerful tool in the analysis of ordinary differential equations (ODEs) and dynamical systems. It provides a criterion for determining the stability of equilibrium points of an ODE without explicitly solving the system. The key idea is to construct a Lyapunov function $V$ whose derivative along the trajectories of the system is nonpositive, $\dot V \le 0$, so that $V$ never increases along solutions. The principle then states that every solution starting in a compact positively invariant set approaches the largest invariant set contained in the region where $\dot V = 0$. If this largest invariant set consists only of the equilibrium point, the equilibrium is asymptotically stable, even when $\dot V$ is only negative semidefinite. In this research project, we explore the LaSalle invariance principle for ODEs and its applications in fields such as control theory, biology, and engineering. We begin by presenting the theoretical background of the principle, including the statements and proofs of the main theorems. We then discuss techniques for constructing Lyapunov functions and for determining the stability of equilibrium points with the LaSalle invariance principle. Furthermore, we investigate applications of the principle to real-world problems. In control theory, it can be used to analyze the stability of control systems and to design feedback controllers that guarantee stability. In biology, it helps in studying the dynamics of ecological systems and population models. In engineering, it can be applied to analyze the stability of mechanical systems and electrical circuits. Moreover, we explore extensions and generalizations of the principle, such as the LaSalle-Razumikhin method for time-delay systems and LaSalle-type theorems for non-autonomous systems. These extensions allow the analysis of more complex dynamical systems with time-varying parameters and external inputs.

Overall, the LaSalle invariance principle is a fundamental tool in the study of ODEs and dynamical systems, providing insight into the stability and behavior of equilibrium points. By understanding and applying this principle, researchers and practitioners can analyze and control a wide range of systems in various disciplines, which makes it valuable in both theoretical research and practical applications.
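The mechanism described above can be observed numerically on a standard example that is not taken from this project: a damped pendulum $x_1' = x_2$, $x_2' = -\sin x_1 - x_2$ (the constants $g/l = 1$ and damping $b = 1$ are assumptions chosen for illustration). With the energy $V = (1 - \cos x_1) + x_2^2/2$ one gets $\dot V = -x_2^2 \le 0$, which vanishes on the whole axis $x_2 = 0$, so Lyapunov's theorem alone only gives stability. A solution staying in $\{\dot V = 0\}$ must keep $x_2 \equiv 0$, hence $\sin x_1 = 0$, so near the origin the largest invariant set is $\{0\}$ and LaSalle's principle predicts convergence to it. The Python sketch below integrates the system with the classical fourth-order Runge-Kutta scheme (our choice of integrator) and checks both facts along one trajectory; it is an illustration, not a proof.

```python
import math

# Illustrative system (an assumption, not from the text): damped pendulum
# x1' = x2, x2' = -sin(x1) - x2, i.e. g/l = 1 and damping b = 1.
def field(x1, x2):
    return x2, -math.sin(x1) - x2

# Energy used as Lyapunov function; along solutions dV/dt = -x2**2 <= 0.
def lyapunov(x1, x2):
    return (1.0 - math.cos(x1)) + 0.5 * x2 ** 2

def rk4_step(x1, x2, h):
    """One classical fourth-order Runge-Kutta step of size h."""
    k1 = field(x1, x2)
    k2 = field(x1 + 0.5 * h * k1[0], x2 + 0.5 * h * k1[1])
    k3 = field(x1 + 0.5 * h * k2[0], x2 + 0.5 * h * k2[1])
    k4 = field(x1 + h * k3[0], x2 + h * k3[1])
    x1 += h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6
    x2 += h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6
    return x1, x2

def simulate(x1, x2, h=0.01, t_end=30.0):
    """Integrate from (x1, x2) and record V after every step."""
    values = [lyapunov(x1, x2)]
    for _ in range(int(round(t_end / h))):
        x1, x2 = rk4_step(x1, x2, h)
        values.append(lyapunov(x1, x2))
    return (x1, x2), values

final_state, v_values = simulate(1.0, 0.0)
# Largest step-to-step increase of V: should be <= 0 up to round-off.
max_increase = max(later - earlier for earlier, later in zip(v_values, v_values[1:]))
print("max increase of V:", max_increase)
print("final state:", final_state)  # close to the equilibrium (0, 0)
```

Note that the trajectory passes through points with $\dot V = 0$ (for instance the starting point, where $x_2 = 0$), yet it does not stop there: only the invariant part of $\{\dot V = 0\}$ can attract solutions.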

Project Overview

PRELIMINARIES

1.1 Definitions and basic theorems

In this chapter, we focus on the basic concepts of ordinary differential equations and recall the relevant theorems about them.

Definition 1.1.1 An equation containing only ordinary derivatives of one or more dependent variables with respect to a single independent variable is called an ordinary differential equation (ODE).

The order of an ODE is the order of the highest derivative in the equation. In symbols, an $n$-th order ODE can be written in the form

$$x^{(n)} = f\big(t, x, \dots, x^{(n-1)}\big) \qquad (1.1.1)$$

Definition 1.1.2 (Autonomous ODE) When $f$ does not depend explicitly on $t$, (1.1.1) is said to be an autonomous ODE. For example,

$$x'(t) = \sin(x(t)).$$

Definition 1.1.3 (Non-autonomous ODE) When $f$ depends explicitly on $t$, (1.1.1) is said to be a non-autonomous ODE. For example,

$$x'(t) = (1 + t^2)\,x^2(t).$$

Definition 1.1.4 A function $f : \mathbb{R}^n \to \mathbb{R}^n$ is said to be locally Lipschitz if for every $r > 0$ there exists $k(r) > 0$ such that

$$\|f(x) - f(y)\| \le k(r)\,\|x - y\| \quad \text{for all } x, y \in B(0, r).$$

The function $f : \mathbb{R}^n \to \mathbb{R}^n$ is said to be Lipschitz if there exists $k > 0$ such that

$$\|f(x) - f(y)\| \le k\,\|x - y\| \quad \text{for all } x, y \in \mathbb{R}^n.$$
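To make the difference between the two definitions concrete, the following Python sketch (an illustration for $n = 1$, with example functions of our own choosing) estimates the ratio $|f(x) - f(y)|/|x - y|$ over random pairs in $B(0, r) = [-r, r]$. For $f(x) = \sin x$ the ratio never exceeds $1$, whatever $r$ is, consistent with $f$ being Lipschitz with $k = 1$. For $f(x) = x^2$ we have $|x^2 - y^2| = |x + y|\,|x - y| \le 2r\,|x - y|$ on $B(0, r)$, so $k(r) = 2r$ works locally, but the observed ratios grow with $r$, so no single global constant exists. Random sampling only gives lower bounds on the best constant; the inequalities just stated are what prove the claims.

```python
import math
import random

def square(x):
    return x * x

def largest_ratio(f, r, samples=20000, seed=0):
    """Largest |f(x) - f(y)| / |x - y| over random pairs x != y in [-r, r].

    This is only a lower bound for the best Lipschitz constant on B(0, r).
    """
    rng = random.Random(seed)
    best = 0.0
    for _ in range(samples):
        x, y = rng.uniform(-r, r), rng.uniform(-r, r)
        if x != y:
            best = max(best, abs(f(x) - f(y)) / abs(x - y))
    return best

for r in (1.0, 10.0, 100.0):
    # sin: ratio stays <= 1 for every r (global constant k = 1).
    # square: ratio stays <= k(r) = 2r but grows with r (only locally Lipschitz).
    print(f"r={r:>6}: sin -> {largest_ratio(math.sin, r):.4f}, "
          f"x^2 -> {largest_ratio(square, r):.4f} (bound 2r = {2 * r})")
```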

Definition 1.1.5 (Initial value problem (IVP)) Let $I$ be an interval containing $t_0$. The problem

$$\begin{cases} x^{(n)}(t) = f\big(t, x(t), \dots, x^{(n-1)}(t)\big), & t \in I, \\ x(t_0) = x_0,\ x'(t_0) = x_1,\ \dots,\ x^{(n-1)}(t_0) = x_{n-1}, \end{cases} \qquad (1.1.2)$$

is called an initial value problem (IVP). The conditions

$$x(t_0) = x_0,\quad x'(t_0) = x_1,\quad \dots,\quad x^{(n-1)}(t_0) = x_{n-1}$$

are called initial conditions.
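A common way to work with the IVP (1.1.2), sketched here as an illustration rather than as part of the original development, is to introduce $y = (x, x', \dots, x^{(n-1)})$, so that (1.1.2) becomes the first-order system $y_1' = y_2, \dots, y_{n-1}' = y_n$, $y_n' = f(t, y_1, \dots, y_n)$ with $y(t_0) = (x_0, x_1, \dots, x_{n-1})$. The Python code below performs this reduction and then integrates with the classical fourth-order Runge-Kutta method (our choice of solver). The test problem $x'' = -x$, $x(0) = 1$, $x'(0) = 0$ is a hypothetical example with exact solution $x(t) = \cos t$.

```python
import math

def as_first_order(f):
    """Rewrite x^(n) = f(t, x, x', ..., x^(n-1)) as y' = F(t, y),
    where y = [x, x', ..., x^(n-1)]."""
    def F(t, y):
        # y_k' = y_{k+1} for the first n-1 components, y_n' = f(t, y).
        return list(y[1:]) + [f(t, *y)]
    return F

def rk4(F, t0, y0, t_end, steps):
    """Classical fourth-order Runge-Kutta for y' = F(t, y), y(t0) = y0."""
    h = (t_end - t0) / steps
    t, y = t0, list(y0)
    for _ in range(steps):
        k1 = F(t, y)
        k2 = F(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k1)])
        k3 = F(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k2)])
        k4 = F(t + h, [yi + h * ki for yi, ki in zip(y, k3)])
        y = [yi + h / 6 * (a + 2 * b + 2 * c + d)
             for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]
        t += h
    return y

# Hypothetical IVP: x'' = -x, x(0) = 1, x'(0) = 0 (exact solution cos t).
F = as_first_order(lambda t, x, dx: -x)
x_end, dx_end = rk4(F, 0.0, [1.0, 0.0], t_end=2.0, steps=200)
print(x_end, math.cos(2.0))    # approximation vs exact x(2)
print(dx_end, -math.sin(2.0))  # approximation vs exact x'(2)
```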


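Lemma 1.1.6 [9] (Gronwall’s Lemma) Let $u, v : [a, b] \to \mathbb{R}_+$ be continuous and suppose there exists $\alpha > 0$ such that

$$u(x) \le \alpha + \int_a^x u(s)v(s)\,ds \quad \text{for all } x \in [a, b].$$

Then,

$$u(x) \le \alpha\, e^{\int_a^x v(s)\,ds} \quad \text{for all } x \in [a, b].$$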

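Proof. Set $w(x) = \alpha + \int_a^x u(s)v(s)\,ds$. Then $w(x) \ge \alpha > 0$, $w$ is differentiable with $w'(x) = u(x)v(x)$, and by hypothesis $u(x) \le w(x)$. Since $v \ge 0$, this implies that

$$\frac{u(x)v(x)}{\alpha + \int_a^x u(s)v(s)\,ds} \le v(x), \quad \text{that is,} \quad \frac{d}{dx}\ln w(x) = \frac{w'(x)}{w(x)} \le v(x).$$

Integrating from $a$ to $x$ and using $w(a) = \alpha$, we obtain

$$\ln\Big(\alpha + \int_a^x u(s)v(s)\,ds\Big) - \ln\alpha \le \int_a^x v(s)\,ds.$$

So, taking the exponential of both sides, we get

$$\alpha + \int_a^x u(s)v(s)\,ds \le \alpha\, e^{\int_a^x v(s)\,ds}.$$

Thus, combining this with the hypothesis,

$$u(x) \le \alpha\, e^{\int_a^x v(s)\,ds}, \quad x \in [a, b].$$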
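The lemma can be checked numerically on examples. The Python sketch below (the helper names and the test cases are our own choices) approximates the integrals on a uniform grid with the trapezoidal rule and tests the hypothesis and the conclusion pointwise, up to a small tolerance. The first case, $v(s) = s$ and $u(x) = \alpha e^{x^2/2}$ on $[0, 1]$, satisfies the hypothesis with equality, which shows that the bound $\alpha e^{\int_a^x v(s)\,ds}$ cannot be improved in general; the second, $u(x) = 1 + x$, $v = 1$, $\alpha = 1$ on $[0, 3]$, satisfies it strictly. A grid check like this is an illustration, not a proof.

```python
import math

def cumulative_trapezoid(values, h):
    """Running integrals from the left endpoint of uniformly sampled values."""
    out = [0.0]
    for left, right in zip(values, values[1:]):
        out.append(out[-1] + 0.5 * h * (left + right))
    return out

def check_gronwall(u, v, alpha, a, b, n=2000, tol=1e-6):
    """Return (hypothesis_holds, conclusion_holds) on a grid of n + 1 points.

    hypothesis: u(x) <= alpha + int_a^x u(s) v(s) ds
    conclusion: u(x) <= alpha * exp(int_a^x v(s) ds)
    """
    h = (b - a) / n
    xs = [a + i * h for i in range(n + 1)]
    us = [u(x) for x in xs]
    vs = [v(x) for x in xs]
    int_uv = cumulative_trapezoid([p * q for p, q in zip(us, vs)], h)
    int_v = cumulative_trapezoid(vs, h)
    hypothesis = all(ui <= alpha + iuv + tol for ui, iuv in zip(us, int_uv))
    conclusion = all(ui <= alpha * math.exp(iv) + tol for ui, iv in zip(us, int_v))
    return hypothesis, conclusion

alpha = 2.0
# Extremal case: equality in the hypothesis, so the bound is attained.
print(check_gronwall(lambda x: alpha * math.exp(x * x / 2), lambda s: s, alpha, 0.0, 1.0))
# Strict case: u(x) = 1 + x, v = 1, alpha = 1 on [0, 3].
print(check_gronwall(lambda x: 1.0 + x, lambda s: 1.0, 1.0, 0.0, 3.0))
```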

Corollary 1.1.7 Let $u, v : [a, b] \to \mathbb{R}_+$ be continuous such that

$$u(x) \le \int_a^x u(s)v(s)\,ds \quad \text{for all } x \in [a, b].$$

Then $u = 0$ on $[a, b]$.

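Proof. For every $n \ge 1$ and every $x \in [a, b]$,

$$u(x) \le \int_a^x u(s)v(s)\,ds \le \frac{1}{n} + \int_a^x u(s)v(s)\,ds.$$

So, by Gronwall’s lemma with $\alpha = \frac{1}{n}$,

$$u(x) \le \frac{1}{n}\, e^{\int_a^x v(s)\,ds} \quad \text{for all } n \ge 1,$$

so letting $n \to \infty$ gives $u(x) \le 0$. Thus $u(x) = 0$, since $u(x) \ge 0$. Hence, $u = 0$ on $[a, b]$.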


1.2 Exponential of matrices

Definition 1.2.1 Let $A \in M_{n\times n}(\mathbb{R})$. Then $e^A$ is the $n \times n$ matrix given by the power series

$$e^A = \sum_{k=0}^{\infty} \frac{A^k}{k!}.$$

The series above converges absolutely for all $A \in M_{n\times n}(\mathbb{R})$.

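Proof. The $n$-th partial sum is

$$S_n = \sum_{k=0}^{n} \frac{A^k}{k!}.$$

So, let $n > m$. Then,

$$S_n - S_m = \sum_{k=m+1}^{n} \frac{A^k}{k!}.$$

Using a submultiplicative matrix norm, so that $\|A^k\| \le \|A\|^k$, we get

$$\|S_n - S_m\| \le \sum_{k=m+1}^{n} \frac{\|A\|^k}{k!}.$$

The right-hand side is part of the tail of the convergent real series $\sum_{k \ge 0} \|A\|^k/k! = e^{\|A\|}$, so $\|S_n - S_m\| \to 0$ as $m \to \infty$ (with $n > m$). So $(S_n)_n$ is a Cauchy sequence in the complete space $M_{n\times n}(\mathbb{R})$, and thus converges. The same bound gives $\sum_{k\ge 0} \|A^k\|/k! \le e^{\|A\|} < \infty$, which is the absolute convergence.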
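The proof suggests a direct, if naive, way to approximate $e^A$: add terms $A^k/k!$ until they become negligible. The following Python sketch (plain nested lists; the helper names and the stopping tolerance are our own assumptions) builds each term from the previous one via $A^k/k! = \big(A^{k-1}/(k-1)!\big)\,A/k$. Stopping when the newest term is tiny is a heuristic, motivated by the factorial decay in the tail estimate above; numerical libraries typically rely on more robust methods such as scaling and squaring.

```python
import math

def mat_mul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def expm_series(A, tol=1e-16, max_terms=500):
    """Approximate e^A by the partial sums S_N = sum_{k=0}^N A^k / k!."""
    n = len(A)
    term = [[float(i == j) for j in range(n)] for i in range(n)]  # A^0/0! = I
    total = [row[:] for row in term]
    for k in range(1, max_terms):
        # A^k/k! obtained from A^(k-1)/(k-1)! by one product and one division.
        term = [[x / k for x in row] for row in mat_mul(term, A)]
        total = [[s + t for s, t in zip(rs, rt)] for rs, rt in zip(total, term)]
        if max(abs(x) for row in term for x in row) < tol:
            break
    return total

print(expm_series([[1.0, 0.0], [0.0, 2.0]]))  # approximately [[e, 0], [0, e^2]]
print(expm_series([[0.0, 1.0], [0.0, 0.0]]))  # [[1.0, 1.0], [0.0, 1.0]]
```

For the nilpotent matrix in the last line, $A^2 = 0$, so the series terminates and the partial sum $I + A$ is exact.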

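Theorem 1.2.2 [3] (Cayley-Hamilton Theorem)

Let $A \in M_{n\times n}(\mathbb{R})$ and let $p(\lambda) = \det(\lambda I - A)$ be its characteristic polynomial. Then

$$p(A) = 0.$$

Proof. Let $A \in M_{n\times n}(\mathbb{R})$ and write

$$p(\lambda) = \det(\lambda I - A) = c_0 + c_1\lambda + c_2\lambda^2 + \dots + c_n\lambda^n, \qquad c_n = 1.$$

From linear algebra we know that, for every square matrix $M$,

$$M\,\operatorname{adj}(M) = \det(M)\, I \qquad \Big(\text{so that } M^{-1} = \frac{\operatorname{adj}(M)}{\det(M)} \text{ when } \det(M) \ne 0\Big),$$

where $\operatorname{adj}(M)$ denotes the adjugate, or classical adjoint, of $M$. The entries of $\operatorname{adj}(\lambda I - A)$ are cofactors of $\lambda I - A$, hence polynomials in $\lambda$ of degree at most $n-1$. So we can write

$$\operatorname{adj}(\lambda I - A) = B_0 + B_1\lambda + B_2\lambda^2 + \dots + B_{n-2}\lambda^{n-2} + B_{n-1}\lambda^{n-1},$$

where $B_i \in M_{n\times n}(\mathbb{R})$ for $i = 0, 1, \dots, n-1$. Applying the identity above with $M = \lambda I - A$ gives

$$(\lambda I - A)(B_0 + B_1\lambda + \dots + B_{n-1}\lambda^{n-1}) = (c_0 + c_1\lambda + c_2\lambda^2 + \dots + c_n\lambda^n)\, I.$$

Equating the coefficients of $\lambda^0, \lambda^1, \dots, \lambda^n$ on both sides gives

$$-AB_0 = c_0 I, \qquad B_{k-1} - AB_k = c_k I \ \ (1 \le k \le n-1), \qquad B_{n-1} = c_n I.$$

Multiplying the equation for $\lambda^k$ on the left by $A^k$ and adding all of them, the left-hand side telescopes to $0$, while the right-hand side becomes

$$c_0 I + c_1 A + c_2 A^2 + \dots + c_n A^n = 0.$$

Thus,

$$p(A) = 0.$$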
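The theorem can be confirmed exactly on small examples with rational arithmetic. The Python sketch below obtains the coefficients $c_0, \dots, c_n$ of $p(\lambda) = \det(\lambda I - A)$ with the Faddeev-LeVerrier recursion (a standard algorithm we swap in for convenience; it is not the adjugate argument of the proof) and evaluates $p(A)$ by Horner's rule using `fractions.Fraction`, so no rounding occurs. The $3 \times 3$ test matrix is an arbitrary choice.

```python
from fractions import Fraction

def mat_mul(A, B):
    """Product of two square matrices stored as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def plus_scalar_identity(M, c):
    """Return M + c*I."""
    return [[M[i][j] + (c if i == j else 0) for j in range(len(M))]
            for i in range(len(M))]

def char_poly(A):
    """Coefficients [c_0, ..., c_n] of det(lambda*I - A) (Faddeev-LeVerrier)."""
    n = len(A)
    A = [[Fraction(x) for x in row] for row in A]
    c = [Fraction(0)] * (n + 1)
    c[n] = Fraction(1)
    M = [[Fraction(0)] * n for _ in range(n)]  # M_0 = 0
    for k in range(1, n + 1):
        M = plus_scalar_identity(mat_mul(A, M), c[n - k + 1])  # M_k
        AM = mat_mul(A, M)
        c[n - k] = -sum(AM[i][i] for i in range(n)) / k
    return c

def poly_of_matrix(c, A):
    """p(A) = c_0 I + c_1 A + ... + c_n A^n, evaluated by Horner's rule."""
    n = len(A)
    A = [[Fraction(x) for x in row] for row in A]
    P = [[Fraction(0)] * n for _ in range(n)]
    for coeff in reversed(c):
        P = plus_scalar_identity(mat_mul(P, A), coeff)
    return P

A = [[2, 1, 0], [0, 1, 3], [1, 0, 1]]
coeffs = char_poly(A)               # lambda^3 - 4*lambda^2 + 5*lambda - 5
print(coeffs)
print(poly_of_matrix(coeffs, A))    # the zero matrix, as the theorem predicts
```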


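Example 1.2.3 (Application of the Cayley-Hamilton Theorem)

Find $e^{tA}$ for

$$A = \begin{pmatrix} 0 & 1 \\ -1 & 0 \end{pmatrix}.$$

Solution:

The characteristic equation is $s^2 + 1 = 0$, and the eigenvalues are $\lambda_1 = i$ and $\lambda_2 = -i$. By Theorem 1.2.2, $A^2 + I = 0$, so every power $A^k$, and hence the whole series for $e^{tA}$, reduces to a linear combination of $I$ and $A$:

$$e^{tA} = \alpha_0 I + \alpha_1 A,$$

where we are to find $\alpha_0 = \alpha_0(t)$ and $\alpha_1 = \alpha_1(t)$. The same reduction applied to the scalar series $e^{ts}$ modulo $s^2 + 1$ shows that $e^{ts} = \alpha_0 + \alpha_1 s$ at each eigenvalue $s = \lambda_1, \lambda_2$. So,

$$e^{ti} = \cos t + i\sin t = \alpha_0 + \alpha_1 i,$$
$$e^{-ti} = \cos t - i\sin t = \alpha_0 - \alpha_1 i,$$

which implies that $\alpha_0 = \cos t$ and $\alpha_1 = \sin t$. So,

$$e^{tA} = \cos(t)\, I + \sin(t)\, A = \begin{pmatrix} \cos t & \sin t \\ -\sin t & \cos t \end{pmatrix}.$$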
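The computation in this example can be mirrored in Python (an illustrative sketch; the function names are our own). For a real $2 \times 2$ matrix with distinct eigenvalues $\lambda_1 \ne \lambda_2$, solving $e^{t\lambda_j} = \alpha_0 + \alpha_1\lambda_j$ for $j = 1, 2$ gives $\alpha_1 = (e^{t\lambda_1} - e^{t\lambda_2})/(\lambda_1 - \lambda_2)$ and $\alpha_0 = e^{t\lambda_1} - \alpha_1\lambda_1$. The code compares the result with the closed form found above and with a truncated power series; the repeated-eigenvalue case, which needs a derivative condition instead, is deliberately left out.

```python
import cmath
import math

def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def expm_series(A, tol=1e-16, max_terms=500):
    """Partial sums of sum_k A^k / k! (Definition 1.2.1)."""
    n = len(A)
    term = [[float(i == j) for j in range(n)] for i in range(n)]
    total = [row[:] for row in term]
    for k in range(1, max_terms):
        term = [[x / k for x in row] for row in mat_mul(term, A)]
        total = [[s + t for s, t in zip(rs, rt)] for rs, rt in zip(total, term)]
        if max(abs(x) for row in term for x in row) < tol:
            break
    return total

def expm_2x2_interpolation(A, t):
    """e^{tA} = alpha0*I + alpha1*A for a real 2x2 A with distinct eigenvalues."""
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4 * det)
    lam1, lam2 = (tr + disc) / 2, (tr - disc) / 2
    if abs(lam1 - lam2) < 1e-12:
        raise ValueError("repeated eigenvalue: this interpolation does not apply")
    alpha1 = (cmath.exp(t * lam1) - cmath.exp(t * lam2)) / (lam1 - lam2)
    alpha0 = cmath.exp(t * lam1) - alpha1 * lam1
    # For real A the imaginary parts cancel up to round-off.
    alpha0, alpha1 = alpha0.real, alpha1.real
    return [[alpha0 + alpha1 * a, alpha1 * b], [alpha1 * c, alpha0 + alpha1 * d]]

def max_diff(X, Y):
    return max(abs(x - y) for rx, ry in zip(X, Y) for x, y in zip(rx, ry))

A = [[0.0, 1.0], [-1.0, 0.0]]
for t in (0.5, 1.0, 2.0, math.pi):
    closed = [[math.cos(t), math.sin(t)], [-math.sin(t), math.cos(t)]]
    tA = [[t * x for x in row] for row in A]
    # Both differences should be at round-off level.
    print(t, max_diff(expm_2x2_interpolation(A, t), closed),
          max_diff(expm_series(tA), closed))
```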

Theorem 1.2.4 [11] Let $A, B \in M_{n\times n}(\mathbb{R})$. Then,

(1) If $0$ denotes the zero matrix, then $e^0 = I$, the identity matrix.

(2) If $A$ is invertible, then $e^{ABA^{-1}} = Ae^{B}A^{-1}$.

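Proof. Recall that, for all integers $s \ge 0$, we have $(ABA^{-1})^s = AB^sA^{-1}$. Now,

$$\begin{aligned} e^{ABA^{-1}} &= I + ABA^{-1} + \frac{(ABA^{-1})^2}{2!} + \cdots \\ &= I + ABA^{-1} + \frac{AB^2A^{-1}}{2!} + \cdots \\ &= A\Big(I + B + \frac{B^2}{2!} + \cdots\Big)A^{-1} \\ &= Ae^{B}A^{-1}. \end{aligned}$$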

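(3) For every $A$, $e^{(A^T)} = (e^A)^T$. In particular, if $A$ is symmetric ($A = A^T$), then $e^A$ is symmetric.

Proof. By definition,

$$e^A = \sum_{k=0}^{\infty} \frac{A^k}{k!}.$$

Then, since $(A^T)^k = (A^k)^T$ and transposition is linear and continuous (so it commutes with the limit of the partial sums),

$$e^{A^T} = \sum_{k=0}^{\infty} \frac{(A^T)^k}{k!} = \sum_{k=0}^{\infty} \frac{(A^k)^T}{k!} = \Big(\sum_{k=0}^{\infty} \frac{A^k}{k!}\Big)^T = (e^A)^T.$$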

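(4) If $AB = BA$, then

$$e^{A+B} = e^Ae^B.$$

Proof.

$$e^Ae^B = \Big(I + A + \frac{A^2}{2!} + \frac{A^3}{3!} + \cdots\Big)\Big(I + B + \frac{B^2}{2!} + \frac{B^3}{3!} + \cdots\Big) = \Big(\sum_{k=0}^{\infty} \frac{A^k}{k!}\Big)\Big(\sum_{j=0}^{\infty} \frac{B^j}{j!}\Big) = \sum_{k=0}^{\infty}\sum_{j=0}^{\infty} \frac{A^kB^j}{k!\,j!},$$

where the product may be expanded and the terms rearranged because both series converge absolutely. Put $m = j + k$, so that $j = m - k$ with $0 \le k \le m$. Since $AB = BA$, the binomial theorem applies to $(A+B)^m$, and we get

$$e^Ae^B = \sum_{m=0}^{\infty}\sum_{k=0}^{m} \frac{A^kB^{m-k}}{k!\,(m-k)!} = \sum_{m=0}^{\infty} \frac{1}{m!}\sum_{k=0}^{m} \frac{m!}{k!\,(m-k)!}A^kB^{m-k} = \sum_{m=0}^{\infty} \frac{(A+B)^m}{m!} = e^{A+B}.$$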
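Properties (2), (3) and (4) are easy to spot-check numerically. In the Python sketch below the matrices are arbitrary choices of ours, and each $e^X$ is approximated by truncating the series of Definition 1.2.1. The last check illustrates why the hypothesis $AB = BA$ in (4) matters: for the non-commuting nilpotent matrices $N_1 = \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}$ and $N_2 = \begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix}$ one has $e^{N_1}e^{N_2} = \begin{pmatrix} 2 & 1 \\ 1 & 1 \end{pmatrix}$, while $e^{N_1 + N_2} = \begin{pmatrix} \cosh 1 & \sinh 1 \\ \sinh 1 & \cosh 1 \end{pmatrix}$.

```python
def mat_mul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def add(A, B):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(A, B)]

def transpose(A):
    return [list(col) for col in zip(*A)]

def expm_series(A, tol=1e-16, max_terms=500):
    """Truncated series sum_k A^k / k! from Definition 1.2.1."""
    n = len(A)
    term = [[float(i == j) for j in range(n)] for i in range(n)]
    total = [row[:] for row in term]
    for k in range(1, max_terms):
        term = [[x / k for x in row] for row in mat_mul(term, A)]
        total = add(total, term)
        if max(abs(x) for row in term for x in row) < tol:
            break
    return total

def max_diff(X, Y):
    return max(abs(x - y) for rx, ry in zip(X, Y) for x, y in zip(rx, ry))

# (2) A invertible (det A = 1); its inverse is written out by hand.
A = [[2.0, 1.0], [1.0, 1.0]]
A_inv = [[1.0, -1.0], [-1.0, 2.0]]
B = [[0.0, 1.0], [-2.0, -3.0]]
err2 = max_diff(expm_series(mat_mul(mat_mul(A, B), A_inv)),
                mat_mul(mat_mul(A, expm_series(B)), A_inv))

# (3) transpose rule, checked on the non-symmetric matrix B.
err3 = max_diff(expm_series(transpose(B)), transpose(expm_series(B)))

# (4) B and C = 2B + I commute, so e^{B+C} = e^B e^C.
C = add([[2 * x for x in row] for row in B], [[1.0, 0.0], [0.0, 1.0]])
err4 = max_diff(expm_series(add(B, C)), mat_mul(expm_series(B), expm_series(C)))

# Non-commuting nilpotent pair: the product rule fails.
N1 = [[0.0, 1.0], [0.0, 0.0]]
N2 = [[0.0, 0.0], [1.0, 0.0]]
gap = max_diff(expm_series(add(N1, N2)), mat_mul(expm_series(N1), expm_series(N2)))
print(err2, err3, err4)  # all at round-off level
print(gap)               # clearly nonzero
```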

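Theorem 1.2.5 [9]

$$\frac{d}{dt}e^{tA} = Ae^{tA} = e^{tA}A, \quad \text{for } t \in \mathbb{R}.$$

Proof. For fixed $A$, $e^{tA} = \sum_{k=0}^{\infty} \frac{t^kA^k}{k!}$ is, entry by entry, a power series in $t$ which converges for every $t \in \mathbb{R}$ (Definition 1.2.1 applied to $tA$). A power series may be differentiated term by term inside its interval of convergence, so

$$\frac{d}{dt}e^{tA} = \sum_{k=1}^{\infty} \frac{k\,t^{k-1}A^k}{k!} = \sum_{k=1}^{\infty} \frac{t^{k-1}A^{k}}{(k-1)!} = A\sum_{j=0}^{\infty} \frac{t^jA^j}{j!} = Ae^{tA}.$$

Factoring $A$ out on the right instead of the left gives $e^{tA}A$. So,

$$\frac{d}{dt}e^{tA} = Ae^{tA} = e^{tA}A.$$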
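Theorem 1.2.5 can be spot-checked with the central difference quotient $\big(e^{(t+h)A} - e^{(t-h)A}\big)/(2h)$, whose error is of order $h^2$ plus rounding. In the Python sketch below the matrix $A$, the time $t = 0.7$ and the step $h = 10^{-5}$ are arbitrary choices, and the exponentials come from the truncated series of Definition 1.2.1.

```python
def mat_mul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def expm_series(A, tol=1e-16, max_terms=500):
    """Truncated series sum_k A^k / k! from Definition 1.2.1."""
    n = len(A)
    term = [[float(i == j) for j in range(n)] for i in range(n)]
    total = [row[:] for row in term]
    for k in range(1, max_terms):
        term = [[x / k for x in row] for row in mat_mul(term, A)]
        total = [[s + t for s, t in zip(rs, rt)] for rs, rt in zip(total, term)]
        if max(abs(x) for row in term for x in row) < tol:
            break
    return total

def scale(c, A):
    return [[c * x for x in row] for row in A]

def max_diff(X, Y):
    return max(abs(x - y) for rx, ry in zip(X, Y) for x, y in zip(rx, ry))

A = [[0.0, 1.0], [-2.0, -3.0]]
t, h = 0.7, 1e-5
E = expm_series(scale(t, A))
# Central difference approximation of d/dt e^{tA} at time t.
E_plus = expm_series(scale(t + h, A))
E_minus = expm_series(scale(t - h, A))
derivative = [[(p - m) / (2 * h) for p, m in zip(rp, rm)]
              for rp, rm in zip(E_plus, E_minus)]
err_left = max_diff(derivative, mat_mul(A, E))   # compare with A e^{tA}
err_right = max_diff(derivative, mat_mul(E, A))  # compare with e^{tA} A
print(err_left, err_right)  # both small: order h**2 plus rounding
```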

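Proposition 1.2.6 The solution $x(\cdot\,; x_0)$ of the linear system

$$\begin{cases} x'(t) = Ax(t), & t \in \mathbb{R}, \\ x(0) = x_0 \in \mathbb{R}^n, \end{cases}$$

where $A \in M_{n\times n}(\mathbb{R})$, is given by

$$x(t; x_0) = e^{tA}x_0.$$

Indeed, by Theorem 1.2.5, $\frac{d}{dt}\big(e^{tA}x_0\big) = Ae^{tA}x_0$, and $e^{0A}x_0 = Ix_0 = x_0$ by Theorem 1.2.4 (1).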
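As a sanity check of Proposition 1.2.6, the Python sketch below (with $A$, $x_0$ and $t$ chosen by us) compares $e^{tA}x_0$, computed from the truncated series, with a classical fourth-order Runge-Kutta integration of $x' = Ax$. For $A = \begin{pmatrix} 0 & 1 \\ -2 & -3 \end{pmatrix}$ and $x_0 = (1, 0)^T$ the exact solution is $x(t) = \big(2e^{-t} - e^{-2t},\ -2e^{-t} + 2e^{-2t}\big)^T$, which can be verified by substituting it into the system.

```python
import math

def mat_mul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def mat_vec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def expm_series(A, tol=1e-16, max_terms=500):
    """Truncated series sum_k A^k / k! from Definition 1.2.1."""
    n = len(A)
    term = [[float(i == j) for j in range(n)] for i in range(n)]
    total = [row[:] for row in term]
    for k in range(1, max_terms):
        term = [[x / k for x in row] for row in mat_mul(term, A)]
        total = [[s + t for s, t in zip(rs, rt)] for rs, rt in zip(total, term)]
        if max(abs(x) for row in term for x in row) < tol:
            break
    return total

def rk4_linear(A, x0, t_end, steps):
    """Classical fourth-order Runge-Kutta for x' = A x, x(0) = x0."""
    h = t_end / steps
    x = list(x0)
    for _ in range(steps):
        k1 = mat_vec(A, x)
        k2 = mat_vec(A, [xi + h / 2 * ki for xi, ki in zip(x, k1)])
        k3 = mat_vec(A, [xi + h / 2 * ki for xi, ki in zip(x, k2)])
        k4 = mat_vec(A, [xi + h * ki for xi, ki in zip(x, k3)])
        x = [xi + h / 6 * (a + 2 * b + 2 * c + d)
             for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]
    return x

A = [[0.0, 1.0], [-2.0, -3.0]]
x0 = [1.0, 0.0]
t = 2.0
via_exp = mat_vec(expm_series([[t * a for a in row] for row in A]), x0)
via_rk4 = rk4_linear(A, x0, t_end=t, steps=200)
exact = [2 * math.exp(-t) - math.exp(-2 * t), -2 * math.exp(-t) + 2 * math.exp(-2 * t)]
print(via_exp, via_rk4, exact)  # the three vectors agree to many digits
```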

6

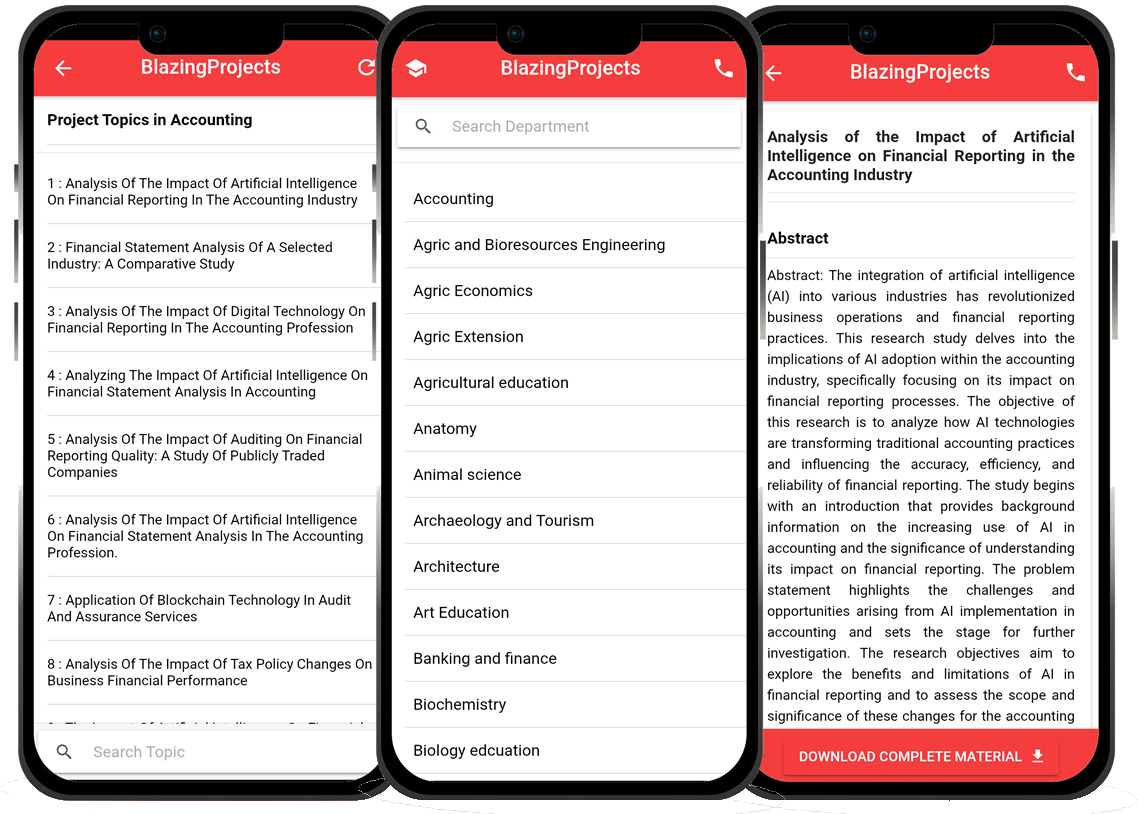

Blazingprojects Mobile App

📚 Over 50,000 Project Materials

📱 100% Offline: No internet needed

📝 Over 98 Departments

🔍 Software coding and Machine construction

🎓 Postgraduate/Undergraduate Research works

📥 Instant Whatsapp/Email Delivery
