Development of an enhanced checkpointing technique in grid computing using programmer-level controls

Table Of Contents

Chapter ONE

1.1 Introduction

1.2 Background of Study

1.3 Problem Statement

1.4 Objective of Study

1.5 Limitation of Study

1.6 Scope of Study

1.7 Significance of Study

1.8 Structure of the Research

1.9 Definition of Terms

Chapter TWO

2.1 Overview of Grid Computing

2.2 Evolution of Checkpointing Techniques

2.3 Types of Checkpointing in Grid Computing

2.4 Challenges in Existing Checkpointing Techniques

2.5 Theoretical Frameworks in Checkpointing

2.6 State-of-the-Art Technologies in Grid Checkpointing

2.7 Best Practices in Grid Checkpointing

2.8 Comparative Analysis of Checkpointing Techniques

2.9 Future Trends in Grid Checkpointing

2.10 Summary of Literature Review

Chapter THREE

3.1 Research Design

3.2 Population and Sampling Techniques

3.3 Data Collection Methods

3.4 Data Analysis Procedures

3.5 Research Instrumentation

3.6 Ethical Considerations

3.7 Reliability and Validity of Research

3.8 Limitations of Research Methodology

Chapter FOUR

4.1 Data Analysis and Interpretation

4.2 Demographic Analysis of Participants

4.3 Checkpointing Performance Metrics

4.4 Comparison with Existing Techniques

4.5 Impact of Programmer Level Controls

4.6 Case Studies and Use Cases

4.7 Discussion on Findings

4.8 Recommendations for Implementation

Chapter FIVE

5.1 Conclusion and Summary

5.2 Summary of Findings

5.3 Contributions to Knowledge

5.4 Implications for Future Research

5.5 Practical Applications of Enhanced Checkpointing

Project Abstract

Grid computing is a collection of computer resources from multiple locations assembled to provide computational, storage, data, or application services. Grid users gain access to these resources with little or no knowledge of where they are located or of the underlying technologies, hardware, and operating systems. Reliability and performance are among the key challenges in grid computing environments. Accordingly, grid scheduling algorithms have been proposed to reduce both the likelihood of resource failure and the overhead of recovering from it. Checkpointing is one of the fault-tolerance techniques used when resources fail. It reduces the work lost to resource faults but can introduce significant runtime overhead. This research presents an enhanced checkpointing technique that extends recent work and aims to lower the runtime overhead of checkpoints. In simulations using GridSim, keeping the number of resources constant and varying the number of gridlets yielded improvements of up to 9%, 11%, and 11% in throughput, makespan, and turnaround time, respectively. Varying the number of resources and keeping the number of gridlets constant yielded improvements of up to 8%, 11%, and 9% in throughput, makespan, and turnaround time, respectively. These results indicate the potential usefulness of the contribution to applications in practical grid computing environments.
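The abstract reports results on throughput, makespan, and turnaround time but does not define them. The sketch below computes the three metrics under commonly used scheduling definitions, which are an assumption here and may differ from how the study or GridSim measures them. The `JobRecord` type and `summarize` function are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass
class JobRecord:
    """Submission and completion time of one job (gridlet), in the same time unit."""
    submit: float
    finish: float


def summarize(jobs):
    """Return makespan, throughput, and mean turnaround time for a batch of jobs.

    Assumed definitions (not taken from the source):
    - makespan: time from the earliest submission to the latest completion
    - throughput: completed jobs per unit of makespan
    - turnaround time: completion time minus submission time, averaged over jobs
    """
    start = min(j.submit for j in jobs)
    end = max(j.finish for j in jobs)
    makespan = end - start
    throughput = len(jobs) / makespan
    mean_turnaround = sum(j.finish - j.submit for j in jobs) / len(jobs)
    return {
        "makespan": makespan,
        "throughput": throughput,
        "mean_turnaround": mean_turnaround,
    }


if __name__ == "__main__":
    batch = [JobRecord(0.0, 10.0), JobRecord(0.0, 20.0), JobRecord(5.0, 25.0)]
    print(summarize(batch))
```

Under these definitions, a lower makespan and turnaround time and a higher throughput all count as improvements.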

Project Overview

INTRODUCTION

1.1 Background of the Study

1.2 Motivation

The ability to checkpoint a running application and restart it later provides useful benefits such as fault recovery, advanced resource sharing, dynamic load balancing, and improved service availability. A fault-tolerant service is essential to satisfy quality-of-service (QoS) requirements in grid computing. However, excessive checkpointing degrades performance. There is therefore a need to improve performance by reducing the number of times checkpointing is invoked.
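The trade-off described above can be illustrated with a first-order cost model. This is a simplified sketch, not the technique developed in this research: it assumes evenly spaced checkpoints of fixed cost, assumes each failure loses on average half of one checkpoint segment, and ignores restart cost. The function name and all parameter values are hypothetical.

```python
def expected_runtime(work, n_checkpoints, ckpt_cost, n_failures):
    """Estimate the runtime of a job that needs `work` time units when fault-free.

    Illustrative assumptions (not from the source):
    - checkpoints are evenly spaced and each one costs `ckpt_cost`
    - each failure loses, on average, half of one checkpoint segment
    - the cost of restarting from a checkpoint is ignored
    """
    segment = work / (n_checkpoints + 1)          # work between consecutive checkpoints
    checkpoint_overhead = n_checkpoints * ckpt_cost
    expected_rework = n_failures * segment / 2    # work repeated after rollbacks
    return work + checkpoint_overhead + expected_rework


if __name__ == "__main__":
    # Hypothetical job: 1000 time units of work, 5 units per checkpoint, 2 failures.
    for n in (0, 4, 9, 49, 199):
        t = expected_runtime(work=1000.0, n_checkpoints=n, ckpt_cost=5.0, n_failures=2)
        print(f"{n:>3} checkpoints -> estimated runtime {t:.1f}")
```

With these hypothetical values, too few checkpoints make rework dominate and too many make checkpoint overhead dominate, which is why reducing unnecessary checkpoint invocations can improve performance.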

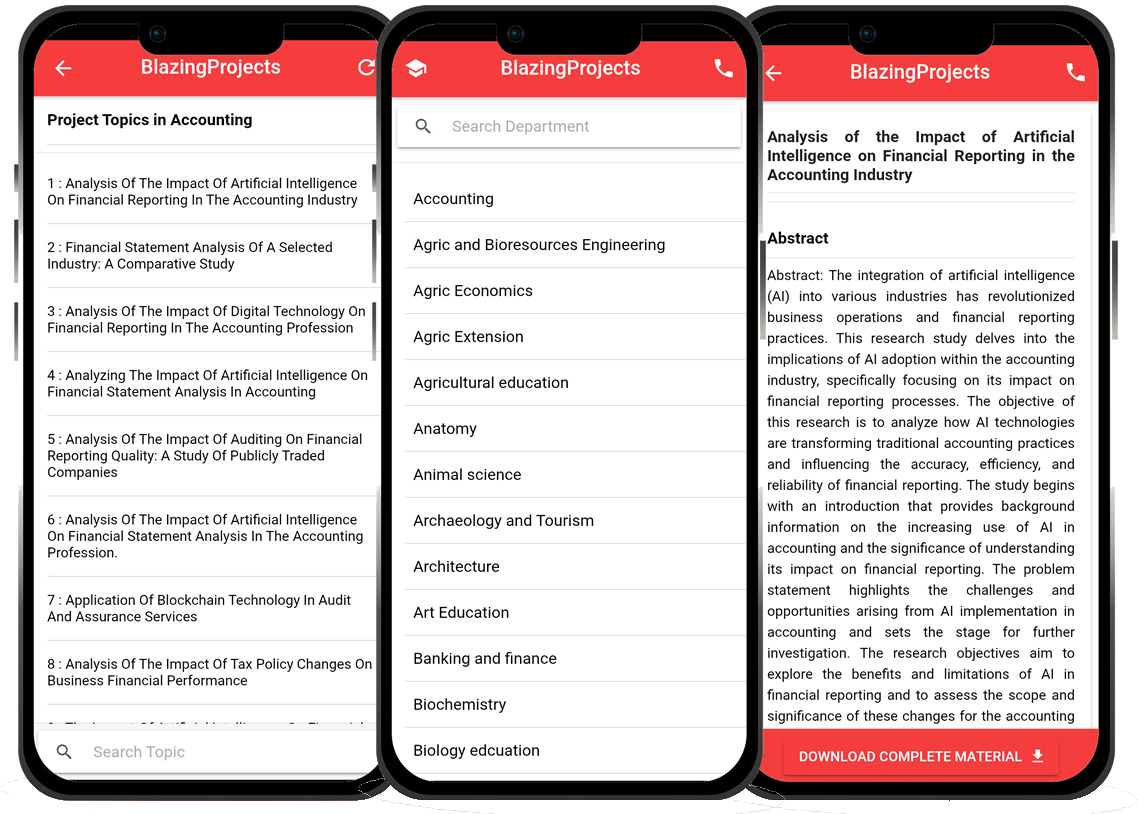

Blazingprojects Mobile App

📚 Over 50,000 Project Materials

📱 100% Offline: No internet needed

📝 Over 98 Departments

🔍 Software coding and Machine construction

🎓 Postgraduate/Undergraduate Research works

📥 Instant Whatsapp/Email Delivery
