KNOWLEDGE OF TEST CONSTRUCTION PROCEDURES AMONG LECTURERS IN IGNATIUS AJURU UNIVERSITY OF EDUCATION, PORT HARCOURT, NIGERIA

Table of Contents

Chapter ONE

1.1 Introduction

1.2 Background of Study

1.3 Problem Statement

1.4 Objective of Study

1.5 Limitation of Study

1.6 Scope of Study

1.7 Significance of Study

1.8 Structure of the Research

1.9 Definition of Terms

Chapter TWO

2.1 Overview of Test Construction Procedures

2.2 Importance of Test Construction

2.3 Principles of Test Construction

2.4 Types of Test Items

2.5 Steps in Test Construction

2.6 Validity and Reliability in Test Construction

2.7 Challenges in Test Construction

2.8 Innovations in Test Construction

2.9 Best Practices in Test Construction

2.10 Comparison of Test Construction Methods

Chapter THREE

3.1 Research Design

3.2 Population and Sampling

3.3 Data Collection Methods

3.4 Research Instruments

3.5 Data Analysis Techniques

3.6 Ethical Considerations

3.7 Pilot Study

3.8 Data Validity and Reliability

Chapter FOUR

4.1 Overview of Findings

4.2 Demographic Analysis

4.3 Test Construction Knowledge Levels

4.4 Factors Influencing Knowledge Levels

4.5 Comparison of Departments

4.6 Recommendations for Improvement

4.7 Implications for Educational Practice

4.8 Future Research Directions

Chapter FIVE

5.1 Summary of Findings

5.2 Conclusion

5.3 Contributions to Knowledge

5.4 Practical Implications

5.5 Recommendations

5.6 Areas for Future Research


ABSTRACT

The study was conducted to assess lecturers' knowledge of test construction procedures. It adopted an analytical descriptive survey design, and one research question and four hypotheses guided the study. The sample comprised 200 lecturers drawn from a population of 440 teaching staff of the university. A self-structured instrument was used for data collection. The research question was answered using mean scores, while the independent-samples t-test and analysis of variance (ANOVA) were used to test the hypotheses at the 0.05 level of significance. Results revealed that the lecturers had a high level of knowledge of test construction procedures. It was also found that lecturers' knowledge of test construction procedures did not differ significantly by gender, years of experience, professional training, or educational qualification.

Keywords: Test Construction Procedures, Item Analysis, Test Blueprint, Knowledge
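The abstract reports that the hypotheses were tested with an independent t-test and ANOVA at the 0.05 level. As a minimal sketch of what those two tests compute, the functions below return the pooled-variance (Student's) t statistic and the one-way ANOVA F statistic using only Python's standard library. The equal-variance form of the t-test, the function names, and the scores are assumptions for illustration; they are not the study's data or procedure. Reaching a decision at 0.05 still requires the t or F distribution (for example `scipy.stats.ttest_ind` and `scipy.stats.f_oneway`), which this sketch leaves out.

```python
from statistics import mean, variance


def pooled_t(group_a, group_b):
    """Independent-samples t statistic assuming equal variances (Student's t).

    Returns (t, degrees_of_freedom).
    """
    n1, n2 = len(group_a), len(group_b)
    # statistics.variance is the sample variance (n - 1 denominator).
    pooled_var = ((n1 - 1) * variance(group_a) + (n2 - 1) * variance(group_b)) / (n1 + n2 - 2)
    std_err = (pooled_var * (1 / n1 + 1 / n2)) ** 0.5
    return (mean(group_a) - mean(group_b)) / std_err, n1 + n2 - 2


def one_way_anova_f(*groups):
    """One-way ANOVA F: between-group mean square divided by within-group mean square.

    Returns (F, df_between, df_within).
    """
    scores = [x for g in groups for x in g]
    grand_mean = mean(scores)
    k, n = len(groups), len(scores)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n - k
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within


# Hypothetical knowledge scores, for illustration only (e.g. two gender groups,
# three qualification groups).
t_stat, t_df = pooled_t([1, 2, 3], [4, 5, 6])
f_stat, f_df_b, f_df_w = one_way_anova_f([1, 2, 3], [4, 5, 6], [7, 8, 9])
print(round(t_stat, 3), t_df)  # -3.674 4
print(f_stat, f_df_b, f_df_w)  # 27.0 2 6
```

A t-test fits the two-level factor (gender), while ANOVA generalizes the same comparison of means to factors with three or more levels, such as years of experience or educational qualification.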


1.0 INTRODUCTION

The business of teaching and learning cannot be complete without periodic examination of learners to determine whether set objectives are being achieved. In the university, each lecturer is expected to quantify how much students have achieved from a course of instruction. This is done through tests administered by lecturers who may not have adequate knowledge of test construction procedures; hence, one often encounters question papers that lack the basic psychometric properties (i.e., validity, reliability, and usability). The tests most commonly used by lecturers are teacher-made achievement tests, as opposed to standardized tests, whose psychometric properties have already been established. For an achievement test, the most important validities to establish are face and content validity. Face validity is concerned with the level of English used and whether the items are ambiguous; for multiple-choice items, one also checks whether they are properly keyed, whether the keys follow a pattern, and whether any items overlap. It is equally important to establish the content validity of an achievement test, because the test must cover the content area to which the learners have been exposed. The reliability and usability of the test must also be established, since achievement is a latent trait that cannot be observed directly. All of these are incorporated in test construction procedures, which every lecturer should know and follow in order to set good tests. Since the power to assess students rests with the lecturers, one would expect adequate measures to be in place to help them acquire test construction skills, but this is not the case. Confirming this, Izard (2005) observed that most teacher-made tests mainly assess the lower-level processes of the cognitive domain as specified in Bloom's taxonomy of educational objectives. It therefore becomes pertinent to guide lecturers on test construction procedures, which involve three major steps:

(a) Test planning: the lecturer plans the type of test to be constructed. This encompasses the test format, the number of items to construct, the objectives to be assessed, and the drawing of the test blueprint.

(b) Item writing: items are written out, bearing in mind ways of improving essay or objective test items. The test is then given to other content specialists to establish its face and/or content validity.

(c) Trial testing and item analysis: the test is administered to a group equivalent to the people for whom it is intended, after which item analysis is carried out by calculating the difficulty and discrimination indices of the items. Items are then selected based on the appropriate levels of these indices for norm-referenced and criterion-referenced tests.

The importance of teachers setting appropriate tests for their students is inarguable, considering the weight attached to the test scores that teachers award. Researchers have stressed that teachers' competence greatly affects the quality of the tests they construct (Chan, 2009; Darling-Hammond, 2012). Marso and Figge (1989) investigated the extent to which supervisors, principals, and teachers agree in assessing their proficiency in testing. The study found the participants proficient in assessment skills. Results showed that teachers rated themselves higher than principals, while principals rated themselves higher than supervisors. Generally, it was found that all of them needed more training in test construction skills.
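The item analysis described above, in which difficulty and discrimination indices are calculated from trial-test responses and used to select items, can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure used in the study: items are scored dichotomously (1 = correct, 0 = wrong); the difficulty index p is the proportion of all examinees answering correctly; the discrimination index D is the number correct in the upper group minus the number correct in the lower group, divided by the group size, with the groups taken as the top and bottom 27% of examinees ranked by total score (a common convention); and the acceptance rule (0.30 ≤ p ≤ 0.70 and D ≥ 0.30) is a rule of thumb for norm-referenced tests assumed here. The function names and response data are hypothetical.

```python
def item_analysis(responses, group_fraction=0.27):
    """Return a (difficulty, discrimination) pair for each item.

    responses[s][i] is 1 if examinee s answered item i correctly, else 0.
    """
    n_examinees = len(responses)
    n_items = len(responses[0])
    # Rank examinees by total score, highest first (sorted keeps ties in input order).
    ranked = sorted(responses, key=sum, reverse=True)
    g = max(1, round(group_fraction * n_examinees))  # size of upper and lower groups
    upper, lower = ranked[:g], ranked[-g:]
    stats = []
    for i in range(n_items):
        p = sum(r[i] for r in responses) / n_examinees  # difficulty index
        d = (sum(r[i] for r in upper) - sum(r[i] for r in lower)) / g  # discrimination index
        stats.append((p, d))
    return stats


def select_items(stats, p_range=(0.30, 0.70), min_d=0.30):
    """Indices of items meeting the assumed norm-referenced acceptance rule."""
    lo, hi = p_range
    return [i for i, (p, d) in enumerate(stats) if lo <= p <= hi and d >= min_d]


# Hypothetical trial-test responses: 10 examinees x 3 items.
responses = [
    [1, 1, 1], [1, 1, 1], [1, 1, 0], [1, 1, 0], [1, 1, 0],
    [0, 1, 1], [0, 1, 0], [0, 1, 1], [0, 1, 0], [0, 1, 0],
]
stats = item_analysis(responses)
for i, (p, d) in enumerate(stats):
    print(i, round(p, 2), round(d, 2))
selected = select_items(stats)
print(selected)  # [0, 2]
```

Here item 1 is answered correctly by everyone (p = 1.0, D = 0.0), so it neither measures difficulty usefully nor separates strong from weak examinees, and it is dropped. A criterion-referenced test would need a different selection rule, since the text notes that appropriate index levels differ between the two kinds of test.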

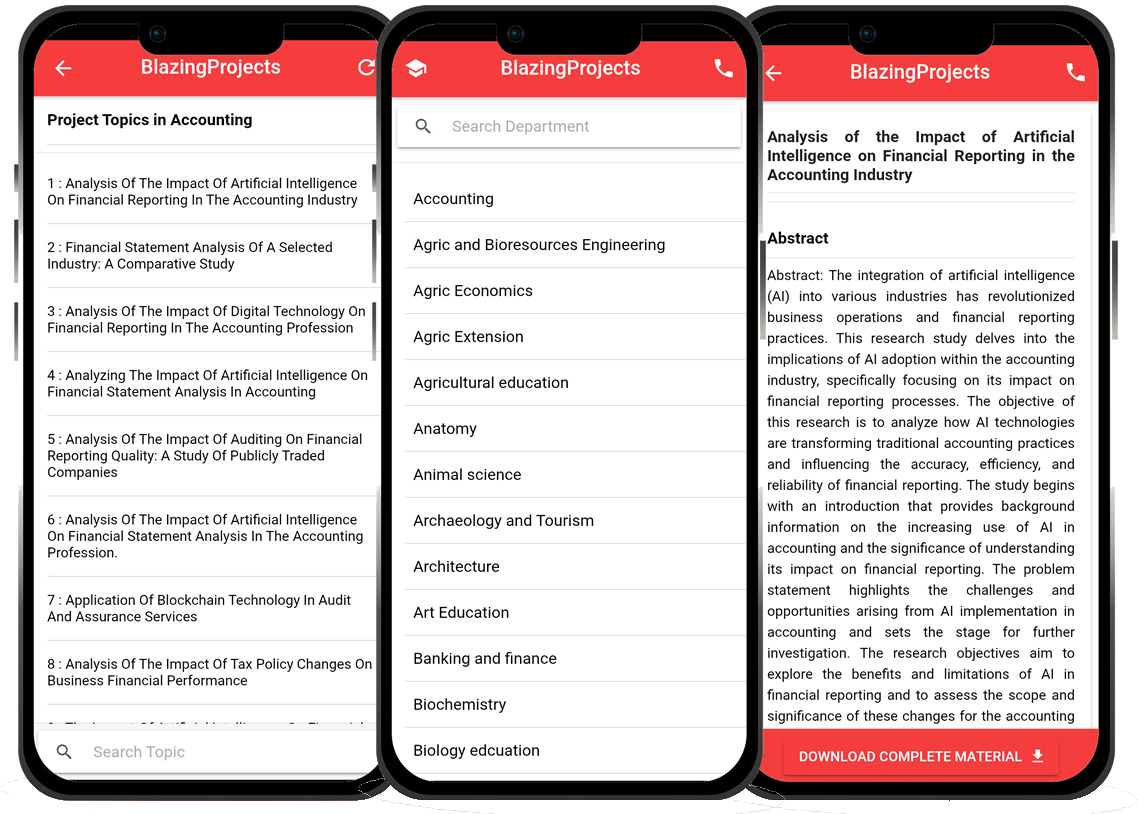

Blazingprojects Mobile App

📚 Over 50,000 Research Thesis

📱 100% Offline: No internet needed

📝 Over 98 Departments

🔍 Thesis-to-Journal Publication

🎓 Undergraduate/Postgraduate Thesis

📥 Instant Whatsapp/Email Delivery

Related Research

Exploring the use of chemistry in promoting global citizenship education...

...

Analyzing the impact of incorporating chemistry in sustainable education practices a...

...

Assessing the effectiveness of using chemistry in sustainable healthcare education...

...

Investigating the Role of Chemistry in Sustainable Healthcare Education...

...

Exploring the Use of Chemistry in Sustainable Community Development Education...

...

Analyzing the impact of incorporating chemistry in sustainable living education...

...

Analyzing the effectiveness of using chemistry in sustainable business practices as...

...

Investigating the Role of Chemistry in Sustainable Technology Education...

...

Exploring the Use of Chemistry in Sustainable Product Design Education...

...